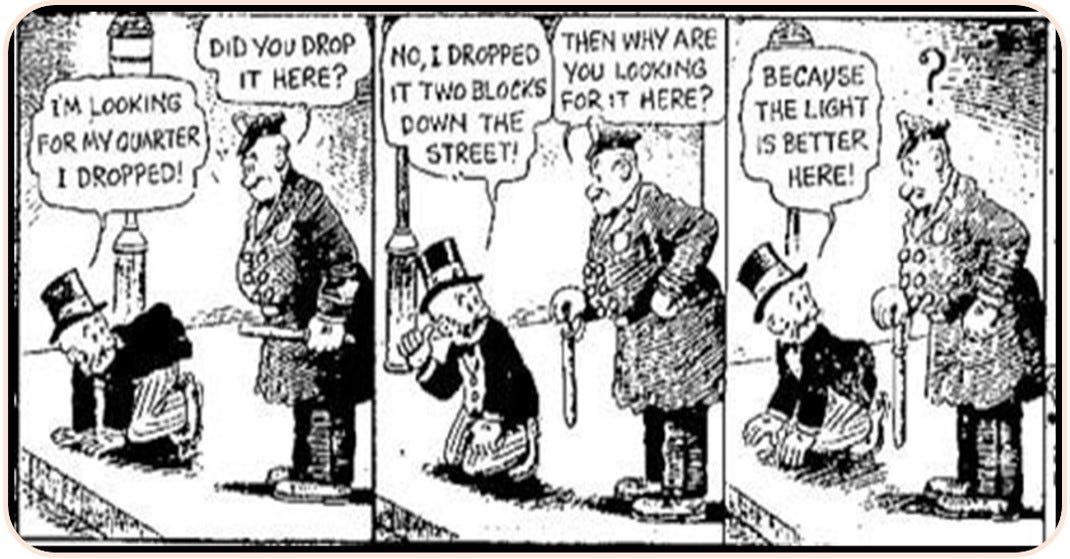

“Science is a bit like the joke about the drunk who is looking under a lamppost for a key that he has lost on the other side of the street, because that’s where the light is. It has no other choice.”

Noam Chomsky1

We are in the dark regarding how the brain works. This has been the case for a long time. But nature abhors a vacuum, whether it is low air pressure or understanding. So we illuminate the dark places in our minds with speculation. Science proposes hypotheses and models to test, but these proposals are often contingent on unspoken paradigms and implicit metaphors. Science ends up validating a metaphor. Inevitably, cracks develop in the current paradigm and the (younger half of the) scientific community shifts to a new paradigm. We collectively crawl from one street light to the next because the light is better there.

The paragraph above uses the seeing-is-understanding metaphor to compare our lack of understanding of the brain to being in the dark. Seeing and understanding are analogous because the processes of understanding and seeing both share a sense of self-awareness: we know whether we can see or understand something. Regarding the brain, our understanding is nascent at best…we are in the dark because we cannot see (understand) answers to our questions.

Metaphor is a figurative device that makes reading more interesting and less abstract. However, it is also used to make complex things appear simpler than they are. In that role, metaphor does not explain the complex thing, but it makes the complex thing appear more familiar. One example is the brain-is-a-computer metaphor. This metaphor assigns the satisfying feeling of a (more) familiar computer to that of an incomprehensible brain because of a few analogous attributes (nerve axons are like wires, senses are input, action is output, neurons are memory). But the metaphor also obscures most brain attributes, which have nothing at all to do with computers: consciousness, emotion, evolution, and so on. Metaphors are a conceptual bait-and-switch.

In this post, I do my best to shake you out of your drunken stupor so that you can see cognition in a clearer light. We begin by visiting several lampposts on the road to finding a key to cognition.

Early Metaphors of Cognition

Socrates (470–399 BC) compared the mind to a wax tablet. Similarly, the seventeenth-century philosopher John Locke considered the mind a “blank slate” on which experience wrote “sense data”. For Sigmund Freud, the mind was a hydraulic system that built up pressure that occasionally required release. Various theorists have described the brain or mind as a cathedral, aviary, (Cartesian) theater, filing cabinet, clockwork mechanism, camera obscura, and phonograph.2 Philosopher John Searle provides a few more:3

“Because we do not understand the brain very well we are constantly tempted to use the latest technology as a model for trying to understand it. In my childhood we were always assured that the brain was a telephone switchboard. (‘What else could it be?’) I was amused to see that Sherrington, the great British neuroscientist, thought that the brain worked like a telegraph system. Freud often compared the brain to hydraulic and electro-magnetic systems. Leibniz compared it to a mill, and I am told some of the ancient Greeks thought the brain functions like a catapult. At present, obviously, the metaphor is the digital computer.”

The digital computer is simply the latest in a long history of metaphors for minds that leverage the latest technology of the day. That alone should make us skeptical of any claim that any metaphor or paradigm has comprehensive explanatory power.

Brain-is-a-computer Metaphor

In 1995, cognitive anthropologist Edwin Hutchins said, “The last 30 years of cognitive science can be seen as attempts to remake the person in the image of the computer.” Not much has changed in the 30 years since that quote. The brain-is-a-computer metaphor is a fundamental paradigm for the cognitive sciences, cognitive psychology, and artificial intelligence. Thinking involves computation and the processing of data. Nerve cell bodies and dendrites are like memories. Nerve axons are like electronic conductors. Who would disagree with that?

There are many problems with the brain-is-a-computer metaphor, and I have addressed them elsewhere in this Substack. See AI Reset, Why Emotion Matters, and Beyond AI. The biggest problem may be that it is the most entrenched metaphor in popular culture, to the point where we remake ourselves in the image of a computer or robot.

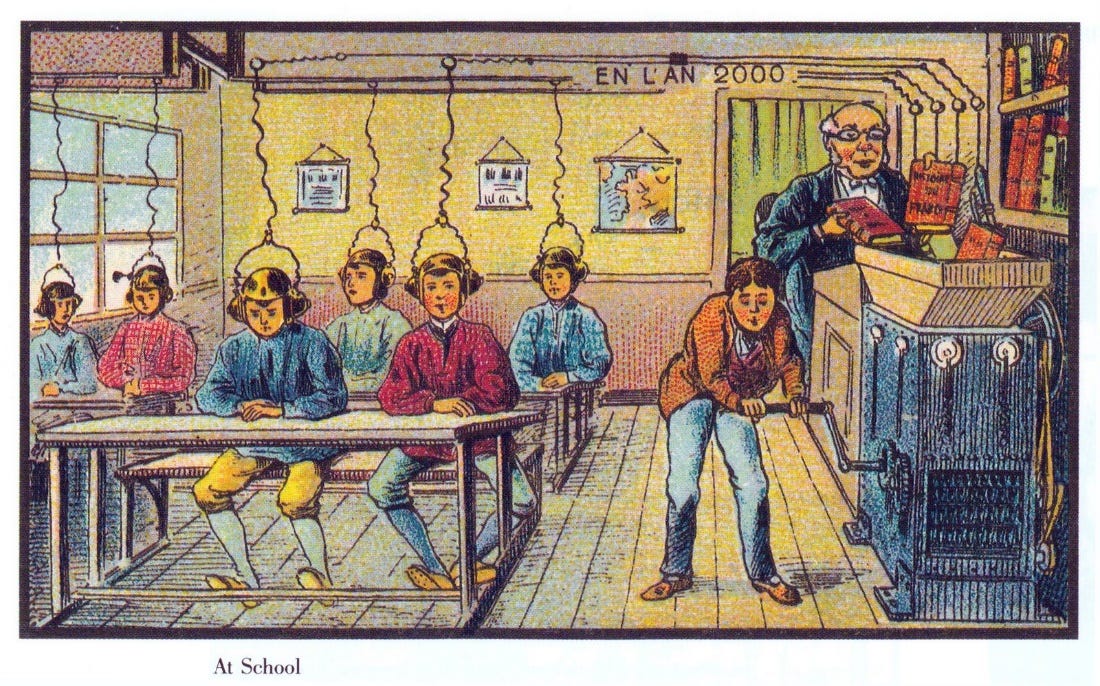

Related to the brain-is-a-computer metaphor is the brain-is-an-empty-vessel metaphor. When a knowledge engineer creates an artificial neural network application, they first gather training data. The engineer then feeds this data into the AI application. This brain-is-an-empty-vessel metaphor has led some teachers to believe that their job is to insert knowledge into their students’ brains, as you might pump gas into an automobile. These educators would make better knowledge engineers than teachers. A better metaphor for teachers is educator-as-gourmet-chef. An educator/chef strives to make knowledge tasty and nutritious for hungry minds. Unlike a mindless, passive container, a hungry brain actively seeks or consumes what it deems important or tasty.

This is how one programs a neural network, not a human.

Dynamical System Metaphor

[Biological brains are,] “to put it bluntly, bad at logic, good at Frisbee”

Andy Clark4

In a distant second place behind the brain-is-a-computer metaphor is the brain-is-a-dynamical-system metaphor. It was actually the dominant metaphor in the 1950s when the study of cybernetics was popular. I will give two examples of dynamical systems.

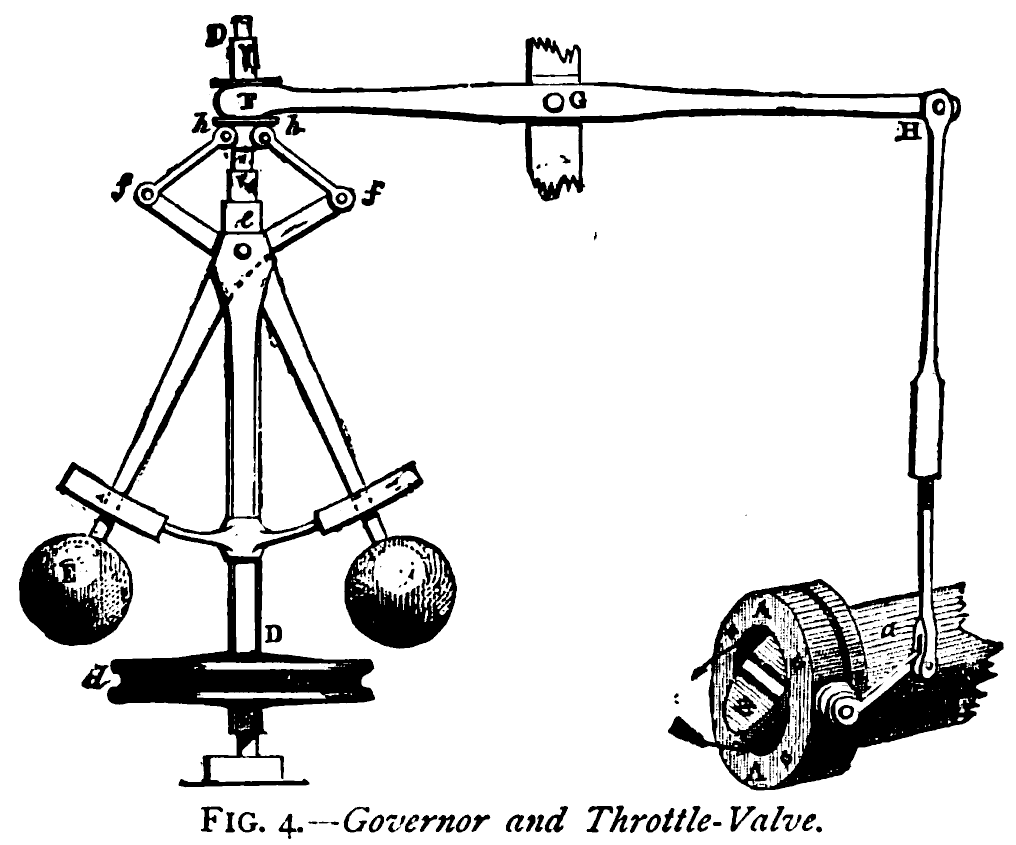

Consider a centrifugal governor used to control steam engines.5 It comprises an assembly of two weights that are spun by the action of a steam engine. When a steam engine goes faster, the weights move outwards because of centrifugal force. This moves a linkage, which closes a valve, which in turn reduces the admission of steam into the cylinders. In this way, the governor maintains a constant speed of the steam engine. The Watt governor is a purely mechanical robot that controls a steam engine.

Illustration of a centrifugal steam governor. Image from "Discoveries & Inventions of the Nineteenth Century" by R. Routledge, 13th edition, published 1900. Public Domain, https://commons.wikimedia.org/w/index.php?curid=231047
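To make the governor's feedback loop concrete, here is a minimal Python sketch of a toy engine, simulated once with a governor and once with a fixed valve. Everything in it is an assumption for illustration: the constants, the linear valve rule, and the names `valve_opening` and `final_speed` are made up and do not model any real engine. Note that even in the simulation there is no stored goal or plan, just speed feeding back on the valve at every step.

```python
# Toy model of a governed steam engine. All constants are made-up
# illustrative values, not measurements of a real engine.
STEAM = 20.0     # torque delivered with the valve fully open
FRICTION = 0.1   # drag proportional to speed
INERTIA = 1.0    # flywheel inertia
TARGET = 10.0    # speed at which the weights begin to close the valve
GAIN = 0.5       # how strongly the valve closes per unit of excess speed


def valve_opening(speed):
    """Flyweight linkage: faster spin closes the valve, clamped to [0, 1]."""
    return min(1.0, max(0.0, 1.0 - GAIN * (speed - TARGET)))


def final_speed(load, governed, steps=5000, dt=0.01):
    """Euler-integrate the engine from rest and return its final speed."""
    speed = 0.0
    for _ in range(steps):
        # Without a governor the valve simply stays half open.
        valve = valve_opening(speed) if governed else 0.5
        torque = STEAM * valve - load - FRICTION * speed
        speed += dt * torque / INERTIA
    return speed


if __name__ == "__main__":
    for load in (2.0, 6.0):
        print("load", load,
              "governed", round(final_speed(load, True), 2),
              "fixed valve", round(final_speed(load, False), 2))
```

In this toy model, tripling the load barely changes the governed speed, while the fixed-valve engine slows dramatically. The small remaining difference is the droop you would expect from a purely proportional linkage.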

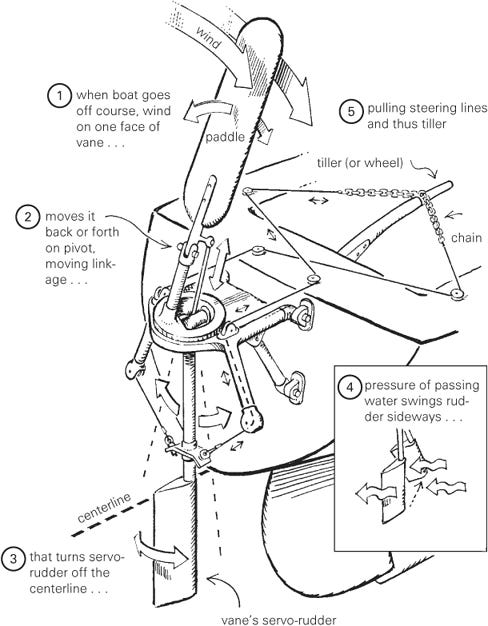

Now I want you to imagine a modern-day device that steers a sailboat without a sailor or computer. Ocean-going sailboats use this kind of self-steering gear. It is entirely mechanical and guided by a wind vane.6 Think of it as a mechanical robot that steers a sailboat.

I’ve drawn your attention to two mechanical ‘robots’ because each is an example of a dynamical system. Both systems are non-computational and non-representational, yet each automates an important task. A growing number of cognitive scientists embrace some form of embodied cognition…as I do. The radical arm of embodied cognition holds that brains are entirely non-computational and non-representational7. The downside of dynamical systems is that they provide little insight into how humans perform logical reasoning. However, as Andy Clark points out, we are bad at logic but good at Frisbee. Dynamical systems offer many parallels with most of what we are good at. I list some of these below.

State Space—In dynamical systems, state space represents all states of a system. Computationally or mathematically, Markov models are often used to represent state space. In cognition, state space can represent all possible mental states someone can be in at a time. My work uses behavior trees because, unlike Markov processes, behavior trees are less brittle, they require no global variables, and they can learn new states and behaviors.8

Attractors—Attractors fuse data, reduce the dimensionality of data, and seek stable states. Attractor networks in honey bees integrate magnetic, visual, and vestibular sensations into a single, reliable sense of direction. The computer analog of an attractor network is a Kalman filter.

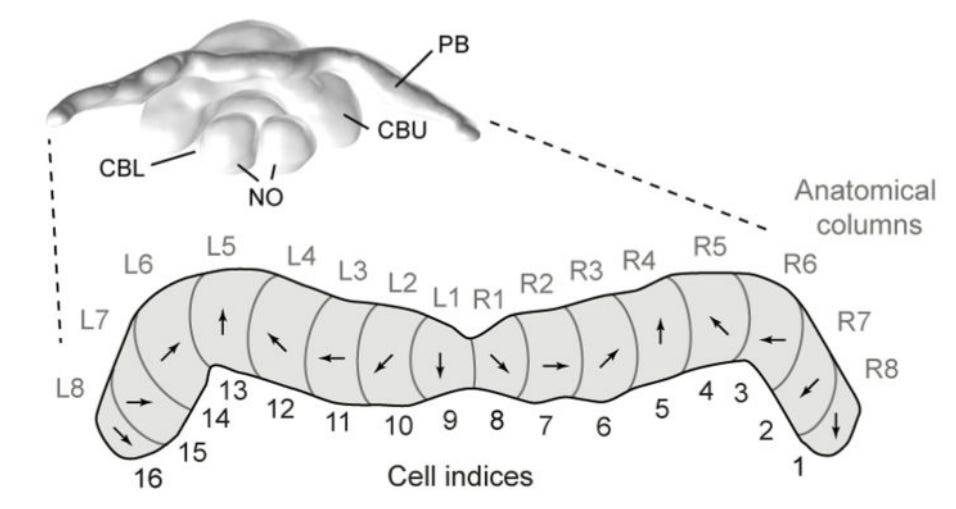

The protocerebral bridge (PB) in the central complex of an insect's brain is an attractor network for determining a single compass heading from multiple sources. Flies, locusts, butterflies, beetles, and bees have an attractor network in their protocerebral bridge.9

Emergence—Complex dynamics can emerge from simple rules in a dynamical system. The same occurs in organisms. A good example of this is how fixed action patterns enable honey bees to defend their hive from a large predator, such as a skunk or a bear. To read more on this, read my post, How Beekeepers Avoid Getting Stung.

Deep Coupling between System and Environment—Both dynamical systems and cognition are systems that continuously adapt to a changing environment. A dynamical system does not need to construct a model of the world because it continuously senses and acts in the real world. This coupling is a circuit, not an arc or reflex (as found in most computer/AI applications) with a beginning and an end.

Time is an Integral Part of Dynamical Systems—The same is not true in the brain-is-a-computer metaphor. Having programmed computers to fly multirotor drones, I can tell you that real-time operating systems are rare and that self-navigating drones cannot afford to wait on an overloaded CPU.
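The cue fusion described under Attractors can be sketched in a few lines of Python. This is only a precision-weighted circular mean, the simplest static version of the idea; it is neither an attractor network nor a full Kalman filter, and the function name `fuse_headings` and the sample readings are hypothetical.

```python
import math


def fuse_headings(cues):
    """Fuse (heading_in_degrees, variance) pairs into one heading in degrees.

    Each cue becomes a vector pointing along its heading, with length equal
    to its precision (1 / variance), so reliable cues pull the result harder.
    The summed vector's direction is the fused heading, wrapped to 0-360.
    """
    x = sum(math.cos(math.radians(h)) / var for h, var in cues)
    y = sum(math.sin(math.radians(h)) / var for h, var in cues)
    return math.degrees(math.atan2(y, x)) % 360.0


if __name__ == "__main__":
    # Hypothetical readings: (heading, variance) for three senses.
    cues = [(350.0, 4.0), (10.0, 1.0), (5.0, 2.0)]
    print(fuse_headings(cues))
```

Working with vectors rather than raw angles matters because headings wrap around: a plain arithmetic average of 350 and 10 degrees gives 180, which points in exactly the wrong direction, while the vector sum points north.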

There is nothing to prevent a computer from implementing a dynamical system. Computer games simulate trajectories, swings, crashes, momentum and more. But as a metaphor, dynamical systems allow us to consider a brain that differs from one described as a computer. Connectionist approaches are compatible with dynamical systems. It might be easier to imagine a connectionist dynamical system in terms of analog or fluidic10 circuits instead of digital circuits.

The dynamical systems metaphor, like all metaphors, comes with some rather enormous gaps in explaining cognition. As mentioned above, it is a poor metaphor for explaining symbolic communication, language, or logical reasoning. Personally, I believe that the infant mammalian brain arrives non-symbolic and non-representational. I am currently working on a new connectionist architecture to show how action-oriented memory becomes symbolic through a process of nominalization.

Embodied Cognition As Combustion in Complicated Circumstances

My favorite metaphor for embodied cognition is a very different dynamical system. The Austrian physicist and philosopher Ernst Mach suggested it over 100 years ago to describe animal life. It applies equally well to natural intelligence:

"The best physical image of a living process is still afforded by a conflagration or some similar process which automatically transfers itself to the environment. A conflagration keeps itself going, produces its own combustion-temperature, brings neighbouring bodies up to that temperature and thereby drags them into the process, assimilates and grows, expands and propagates itself. Nay, animal life itself is nothing but combustion in complicated circumstances."

In the same way that a fire cannot exist apart from either heat or fuel, adaptive embodied cognition cannot exist apart from the environment it learns from and adapts to.

Fire is enigmatic, like the brain. A high-temperature conflagration may include matter that is neither solid, liquid, nor gas: a supercharged fire contains a plasma. Plasma, like consciousness, is mysterious and poorly understood, but it is neither supernatural nor beyond the purview of scientific inquiry.

You can describe fire as having a social quality. In conflagration, as in sexual and social bonding, sparks and individual organisms increase in number and so increase their chances for survival. Ultimately, all fires and species become extinct.

This metaphor's best feature is that nobody is going to mistake cognition for a literal conflagration.

I haven’t reviewed all the metaphors available for cognition. The brain-is-a-quantum-computer is another one. It’s unique because it employs one mystery (quantum entanglement) to explain another mystery (consciousness). As technology advances, there will be others.

The Best Use of Metaphor

What should become obvious at this point is that metaphors make poor models because most features are not analogous between model and subject. Metaphor emphasizes the few similarities and hides the many differences. It delivers a feeling of understanding without actual understanding. That is why I refer to metaphor as a conceptual bait-and-switch.

Despite metaphor’s failure as a model, it often becomes the implicit basis for a scientific paradigm—the most recent being the brain-is-a-computer metaphor. Science proposes hypotheses and models to test, but these tests end up validating (or invalidating) the metaphor—not the brain itself. Inevitably, cracks develop in the current paradigm and the scientific community grasps for a new metaphor... on to another lamppost.

The brain has inspired so many metaphors because it is unlike anything else in the world, and no metaphor exists that can describe it comprehensively. George Box said, "All models are wrong, but some are useful." I think we can say the same thing about metaphors. It's best not to get too attached to any single model, paradigm, or metaphor.

So what is the best metaphor? Metaphors are useful because they give you a handle for something that might otherwise be difficult to reference or conceptualize. Maybe we should learn from physics: when one metaphor fails (e.g., radiation-is-a-stream-of-high-energy-particles), simply add a second one (e.g., radiation-is-an-electromagnetic-wave).

When we consider the human nervous system, the brain-is-a-dynamical-system metaphor is useful for describing homeostasis, how we play Frisbee, and a lot of cognition in nonhuman animals. The brain-is-a-computer metaphor is better at describing how we reason or use representation... we process representations. In either case, we need to remember that the feeling of closure we get from metaphor is not real. Metaphor gives us the feeling that we understand something without real understanding. Like the drunk looking for his keys under a lamppost, that feeling should not be the primary motivation for our behavior.

The book Metaphors We Live By, written by George Lakoff and Mark Johnson, inspired the title of this post. That book and Lakoff's Women, Fire, and Dangerous Things got me interested in embodied cognition.

Lakoff, G., & Johnson, M. (1981). Metaphors we live by. University of Chicago Press.

Lakoff, G. (1987). Women, fire, and dangerous things: What categories reveal about the mind. University of Chicago Press.

Noam Chomsky quoted in Barsky, R. F. (1997). Noam Chomsky: A life of dissent. The MIT Press.

Barrett, L. (2011). Beyond the brain: How body and environment shape animal and human minds. Princeton University Press.

Searle, J. R. (1984). Minds, brains and science. Harvard University Press, p. 44.

Clark, A. (2004). Natural-born cyborgs: Minds, technologies, and the future of human intelligence. Oxford University Press, USA.

It is hard to argue that no representations exist in the human brain. When I imagine a tree with my eyes closed, something must be representing a tree which is not a tree itself or even a perceptual projection of a tree. See also:

Nagel, Saskia K. “Embodied, Dynamical Representationalism - Representations in Cognitive Science.” (2005).

Stone, Thomas & Webb, Barbara & Adden, Andrea & Weddig, Nicolai & Honkanen, Anna & Templin, Rachel & Wcislo, William & Scimeca, Luca & Warrant, Eric & Heinze, Stanley. (2017). An Anatomically Constrained Model for Path Integration in the Bee Brain. Current Biology. 27. 10.1016/j.cub.2017.08.052.

https://en.wikipedia.org/wiki/Fluidics. Check out the MONIAC Computer, built in 1949. It was a fluid-based analog computer capable of complex simulations superior to the digital computers of the day.

Everything we think and perceive are metaphors all the way down. Even the concrete redness of red and the pressure of the chair pushing against my buttocks are metaphors.

Most controversies around illusionist theories of mind and consciousness would dissipate if they were called metaphorist.

Hi Tom. I’ve been putting off what to do about this post of yours. Though extremely well written, and though I certainly have great respect for you, I also can’t get over how much I cognitively depend upon good metaphors to help me figure things out. And though like you I consider functional computationalists quite misguided, in many ways I also consider the brain/computer analogy to be extremely helpful. But if blogging has taught me anything, it’s that people ultimately just play this game in order to be agreed with. So whenever I talk with people who have opposing views, I find them to generally get angry with me when I make effective opposing arguments. And do I get angry when it goes the other way? I suppose I’d say what they probably say — I don’t recall anyone ever making a good argument against one of my positions, or if they did, I’ve certainly never gotten angry! So it goes…. Anyway I do appreciate you, but also have some opposing positions. I need to figure out how to effectively communicate them for my own blog rather than always just provide commentary at the blogs of others (and angering them too often). I doubt my writing skills will ever be nearly as good as yours, but need to try anyway.