This blog and others (see Gary Marcus on AI) write about the shortcomings of AI and why it will never scale to human-level cognition. But criticism is easy, and it carries little weight when no compelling alternative exists. That is what drives me. In this blog, I outline my search for a more biological approach to machine intelligence.

Today, embodied cognition is a theoretical idea. At its core, it asks how knowledge and reasoning originate in the world. You could answer "evolution," but that does not provide enough detail to actually build and test something. I am an engineer: my nature demands that I build something and test it before I can claim to understand it.

There are plenty of robots programmed to move their arms. But nobody has built a robot that randomly waves its arms and infers, for the first time, that those arms are part of said robot and that they provide affordances. Why is that important? It is because human-level cognition requires a brain that can discover and learn on its own. It is not a passive container of knowledge to be filled by an uber-intelligent being, nor a knowledge vacuum that sucks up every detail and experience. Rather, it is an active participant adapting to its environment through a selective process that bootstraps learned behaviors from genetically determined innate behaviors, goals, and emotions. Anyone who has raised an infant knows this to be true.

Artificial Intelligence is a powerful tool. It is auto-complete on steroids. But its output is derived from information provided by uber intelligences. It has no faculty for solving original problems. But AI is now commercially valuable and many believe (wrongly) that AI will scale into human-level competence (a goal with the terrible name of Artificial General Intelligence or AGI). So, for the time being, AI will dominate research and the marketplace. But I have no doubt that yet another AI Winter is approaching.

I seek to understand what I call Natural Intelligence: an approach that mirrors the natural algorithms, computational processes, and adaptation of animal cognition in robots. To that end, I reverse engineered how a honey bee navigates to a specific bed of flowers--potentially miles distant--and returns to her colony behind a tiny hole in a tree in a forest of similar-looking trees. Why is this important?

I suspect that navigation provides a key part of the computational framework for problem solving, tool making and language acquisition that AI lacks. You can see how the challenges of navigation map onto other domains, such as baking a cake.

By duplicating the algorithms and computational processes used in honey bees and mammals to navigate, I hope to explain the mechanism of baking cakes and solving every other problem. There is a lot I am leaving out here:

How and why visual and acoustic imagery (iconic representations) get transformed into indices and symbols. In other words, what are the mechanics of Peircean semiotics?

How analogy and metaphor enable innovation (the mechanics of invention and tool making).

How intangible words and concepts--ideas that transcend perception--originate. Examples include: "freedom", "justice", "future", and "unicorn".

I will have reached my goal when I can show (not only explain) a computer that learns as a child learns and converses in words while lacking any internal representations or innate data formats (such as ASCII or MP4…human conventions) or any knowledge that did not originate through the senses.

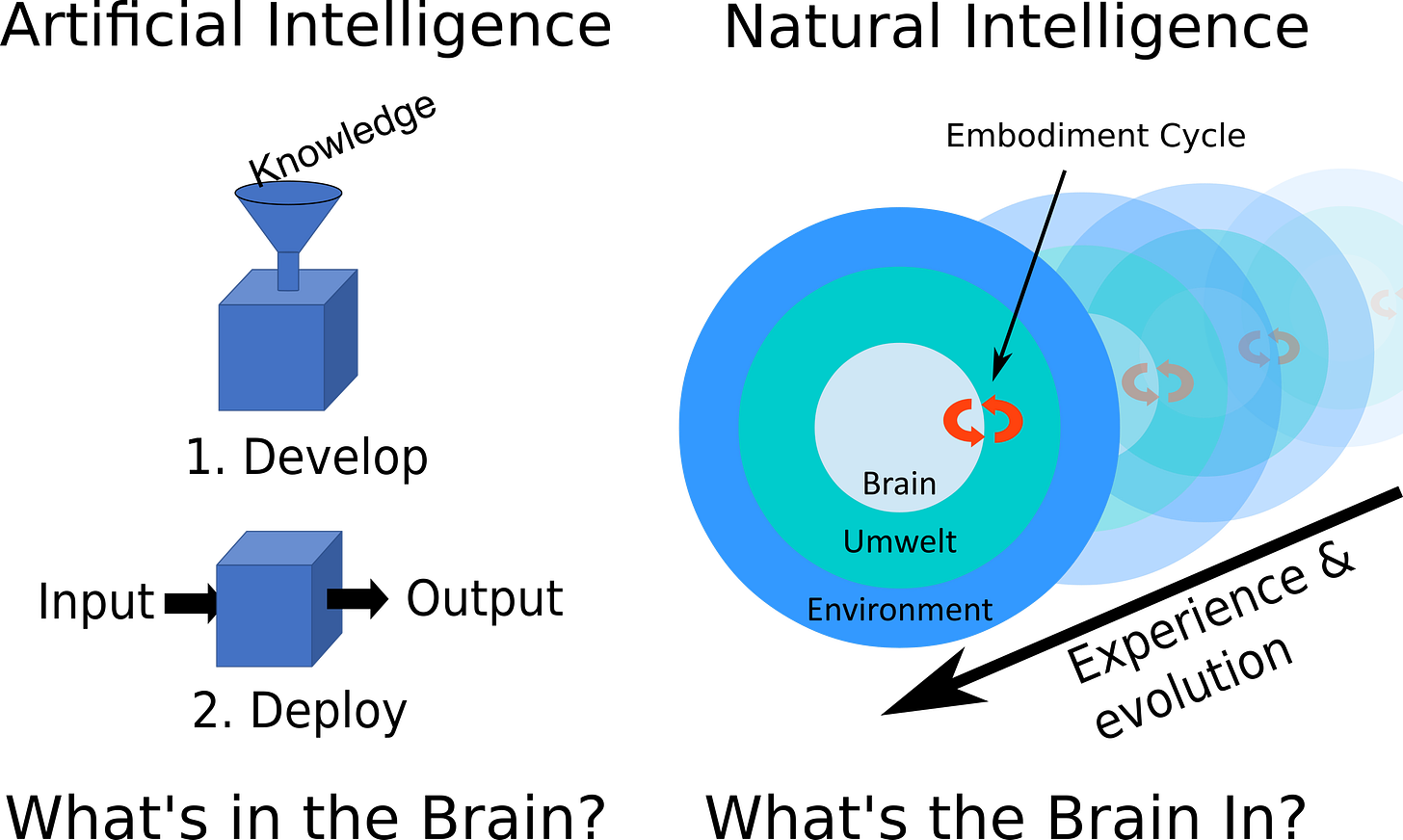

One way that embodied cognition differs from AI is how it interacts with its environment. If you raise an infant mammal in a box, devoid of socialization or interaction with a rich environment, it grows up with permanent cognitive impairment. That is because the perception of one's own actions is necessary for development and learning. Unlike embodied cognition, AI is programmed with training data from a knowledge engineer. AI's process topology is not a perception-action loop (what I call an embodiment cycle) but a reflexive arc: ask a question (input), get an answer (output). Once deployed, an AI learns nothing. This is illustrated below. AI asks what is in the brain; natural intelligence asks what is the brain in.
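To make the difference in process topology concrete, here is a toy Python sketch. It is only an illustration under stated assumptions: the names `reflex_arc`, `embodiment_cycle`, and `frozen_model`, the one-dimensional world, and the gain-adjustment rule are all hypothetical and are not taken from the drone software described in this post.

```python
from typing import Callable, Dict, Tuple


def reflex_arc(model: Callable[[str], str], question: str) -> str:
    """One-shot topology: input in, output out. Nothing is retained."""
    return model(question)


def embodiment_cycle(goal: float, steps: int = 20) -> Tuple[float, float]:
    """Closed loop in a toy 1-D world: sense, act, perceive the result, adapt."""
    position = 0.0
    gain = 0.1  # stand-in for a weak innate behaviour: creep toward the goal
    for _ in range(steps):
        error = goal - position          # sense
        position += gain * error         # act on the world
        new_error = goal - position      # perceive the consequence of the action
        if abs(new_error) < abs(error):  # the action helped: reinforce it
            gain = min(1.0, gain * 1.5)
        else:                            # it did not help: back off
            gain *= 0.5
    return position, gain


# Hypothetical "deployed" model: a fixed lookup that never changes.
FROZEN_ANSWERS: Dict[str, str] = {"capital of France?": "Paris"}


def frozen_model(question: str) -> str:
    return FROZEN_ANSWERS.get(question, "unknown")


if __name__ == "__main__":
    print(reflex_arc(frozen_model, "capital of France?"))
    print(embodiment_cycle(goal=10.0))
```

The point of the contrast is structural: calling `reflex_arc` twice with the same question gives the same answer and leaves nothing behind, while every pass through `embodiment_cycle` changes both the world (`position`) and the agent (`gain`).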

This suggests to me that to develop natural intelligence capable of autonomous learning, one needs a robot that can act and sense in its environment. It must be able to differentiate between ego- or self-motion and motion in the environment. Additionally, it must be able to differentiate between animate and inanimate objects. It must have some sense of place.

Due to my interest in both honey bees and multirotor drones, I've combined the two by building flying robots that navigate like honey bees. Like honey bees, multirotor drones come with a plethora of sensors. Many are redundant, as they are in honey bees.

3-axis accelerometer

3-axis gyroscope

3-axis magnetometer

Down-looking optic flow sensor

Down-looking range (lidar and sonar) sensors

To this I add a stereo camera that gives me forward range and color imagery. No, honey bees do not have stereo vision, but they do sense distance via a flight pattern called saccades (a different sense of the term from retinal saccades in mammals). All research drones also contain GPS. I use GPS only as a benchmark for the GPS-denied navigation I am simulating.
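As a rough illustration of using GPS purely as a benchmark, the sketch below scores a GPS-denied position estimate against a GPS fix. It assumes the estimate is a dead-reckoned east/north offset from the launch point built from heading and ground speed, which is an assumption for this example and not a description of the actual navigation code. The function names and the equirectangular approximation (adequate over a few hundred metres) are likewise choices made for the example.

```python
import math
from typing import Iterable, Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def dead_reckon(steps: Iterable[Tuple[float, float, float]]) -> Tuple[float, float]:
    """Integrate (heading_deg, speed_m_s, dt_s) samples into east/north metres.

    Compass convention: 0 degrees is north, 90 degrees is east.
    """
    east = north = 0.0
    for heading_deg, speed, dt in steps:
        h = math.radians(heading_deg)
        east += speed * dt * math.sin(h)
        north += speed * dt * math.cos(h)
    return east, north


def gps_to_local(lat: float, lon: float, lat0: float, lon0: float) -> Tuple[float, float]:
    """Convert a GPS fix to east/north metres from (lat0, lon0)."""
    east = math.radians(lon - lon0) * EARTH_RADIUS_M * math.cos(math.radians(lat0))
    north = math.radians(lat - lat0) * EARTH_RADIUS_M
    return east, north


def position_error_m(
    estimate: Tuple[float, float],
    gps_fix: Tuple[float, float],
    home: Tuple[float, float],
) -> float:
    """Distance in metres between the GPS-denied estimate and the GPS fix."""
    gps_east, gps_north = gps_to_local(gps_fix[0], gps_fix[1], home[0], home[1])
    return math.hypot(estimate[0] - gps_east, estimate[1] - gps_north)


if __name__ == "__main__":
    home = (47.0, 8.0)  # arbitrary launch coordinates for the example
    estimate = dead_reckon([(90.0, 2.0, 5.0)])  # fly east at 2 m/s for 5 s
    print(position_error_m(estimate, gps_fix=home, home=home))
```

GPS never feeds the estimate here; it is only consulted afterwards to measure how far the estimate has drifted.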

The photo below shows some of the drones I have flown for fun and research. The research ones include an Ardupilot flight controller and a Raspberry Pi computer that I program in Python. The quadcopter in the upper left is my primary research platform today.

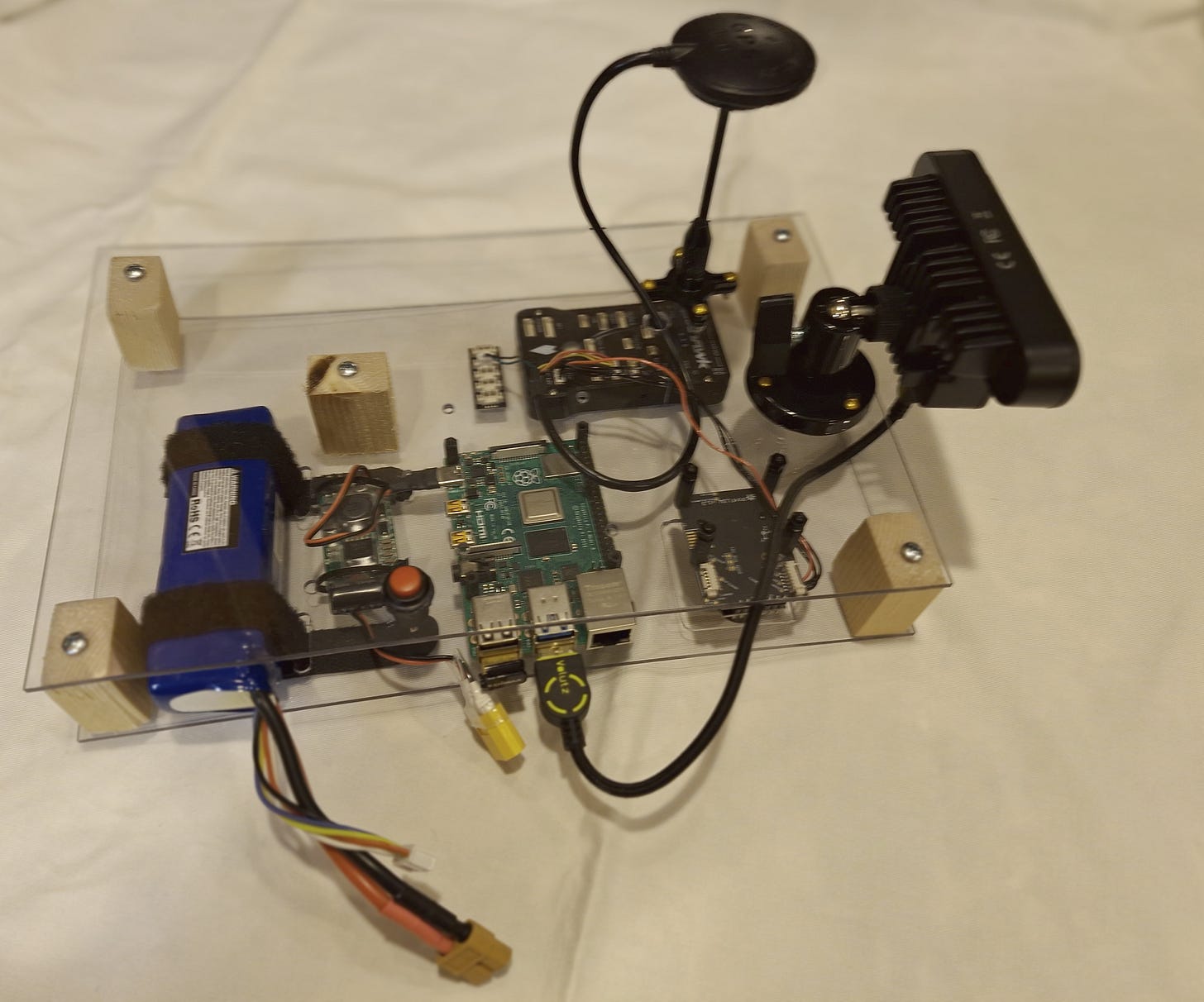

Using drones as an experimental platform is both risky and time consuming. It is risky because the possibility of crashing is greatest when the drone flies itself. Flying drones is time consuming because--like a space launch or a commercial airplane flight--there are checklists to review and many parts to get in order prior to launch. To save time and money, I have built a mobile platform that carries all of the same sensors (sonar ranger, optic flow, stereo camera) along with Wi-Fi, a Raspberry Pi, and a battery.

My testing regime works like this:

I write and test code on a desktop development machine in Python. I may use an Ardupilot simulator and/or previously recorded video. Once I am confident that it works…

I download the program to my mobile unit and evaluate it using an actual Ardupilot flight controller and sensors by walking around. If that works as expected, then…

I download the program to a Raspberry Pi on Big Bird, my main test drone. I have programmed a switch on my radio control (RC) transmitter to give or take back manual control from the Raspberry Pi on the drone.

Some readers will have a bone to pick with me: "What is a computer program if not an internal representation?" That is a valid and astute observation. No intelligent living organism--E. coli bacterium or human infant--arrives in the world without some genetically hardwired innate behavior. It is from these simple innate behaviors that learned behaviors develop. Learning and adaptation in each species is a bootstrap process that begins from some starting point. My programs are analogous to those starting points. They are constrained by several requirements:

No innate declarative or symbolic knowledge. Honey bees and my drones need to first learn how to recognize their own home as part of learning to navigate and return home. The closest thing to innate declarative knowledge in natural intelligence is simple procedural knowledge, elemental goals, and emotions. We are all born knowing--without words--that honey is good (sweet) and skinned knees (pain) are bad. This innate knowledge guides our actions and what we learn.

All computer processing must be consistent with biological processing. I could have used an Extended Kalman Filter for data fusion and filtering of noisy data. Instead, I have implemented a connectionist network called a Continuous Attractor Network (CAN). Two-dimensional CANs exist in mammals as place cells. An orientation or heading CAN has been identified within honey bees that integrates heading direction from magnetic, optical, and vestibular sources. Not all of my programming is connectionist-based. I could implement associative networks but find hash tables to be much easier and faster. I do, however, avoid iterative, recursive, and other time-indeterminate functions (the embodiment cycle itself, which is recursive, is the exception).
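For readers who have not met a heading CAN before, here is a minimal sketch of the idea in plain Python. It is a textbook-style toy and not the network running on the drones: the class name `HeadingRing`, the cosine-shaped recurrent weights, the 72-cell ring, and the `cue_gain` value are all assumptions chosen to keep the example short. A bump of activity on a ring of cells encodes heading; a turn signal (for example from a gyroscope) rotates the bump, and an external cue (for example a magnetic heading) pulls the bump toward it.

```python
import math
from typing import List, Optional


class HeadingRing:
    """Toy rate-based ring attractor: each cell has a preferred heading."""

    def __init__(self, n_cells: int = 72, start_deg: float = 0.0) -> None:
        self.prefs = [2.0 * math.pi * i / n_cells for i in range(n_cells)]
        self.rates = self._normalise(self._bump(math.radians(start_deg)))

    def _bump(self, centre: float) -> List[float]:
        # Half-rectified cosine bump of activity centred on `centre` (radians).
        return [max(0.0, math.cos(p - centre)) for p in self.prefs]

    @staticmethod
    def _normalise(rates: List[float]) -> List[float]:
        total = sum(rates)
        return [r / total for r in rates]

    def step(
        self,
        turn_deg: float = 0.0,
        cue_deg: Optional[float] = None,
        cue_gain: float = 0.3,
    ) -> None:
        turn = math.radians(turn_deg)
        # Cosine recurrent weights: nearby cells excite, opposite cells inhibit.
        # Offsetting the weights by `turn` rotates the bump (self-motion input).
        drive = [
            sum(math.cos(p_i - p_j - turn) * r_j
                for p_j, r_j in zip(self.prefs, self.rates))
            for p_i in self.prefs
        ]
        if cue_deg is not None:
            # An external heading cue adds a weak bump that attracts the activity.
            cue = self._bump(math.radians(cue_deg))
            drive = [d + cue_gain * c for d, c in zip(drive, cue)]
        self.rates = self._normalise([max(0.0, d) for d in drive])

    def heading_deg(self) -> float:
        # Population-vector decode of the bump position, in [0, 360).
        x = sum(r * math.cos(p) for p, r in zip(self.prefs, self.rates))
        y = sum(r * math.sin(p) for p, r in zip(self.prefs, self.rates))
        return math.degrees(math.atan2(y, x)) % 360.0


if __name__ == "__main__":
    ring = HeadingRing()
    for _ in range(9):
        ring.step(turn_deg=10.0)   # nine 10-degree turns
    print(ring.heading_deg())
    for _ in range(50):
        ring.step(cue_deg=40.0)    # a steady cue drags the bump toward 40 degrees
    print(ring.heading_deg())
```

Unlike an Extended Kalman Filter, nothing here keeps an explicit covariance. The weighting between self-motion and external cues falls out of `cue_gain` and the recurrent dynamics, which is one reason such networks are attractive as a biologically plausible alternative.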

The system must be capable of evolving. Every Python program must ultimately be reducible to a tokenized string capable of mutation. The simpler I can make that string and the more biologically plausible the tokens, the more convincing will be my model of natural intelligence. This is a constraint I keep in the back of my mind. I hope, some day, to evolve Python programs as new species of robot. Part of this research is to determine what those tokens/modules represent.
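What "a tokenized string capable of mutation" might look like can be sketched in a few lines of Python. Everything here is hypothetical: the three-token vocabulary (`F`, `L`, `R`), the grid world, and the mutate-and-keep-if-no-worse loop are placeholders, since working out what the real tokens should represent is still an open part of the research.

```python
import random
from typing import Callable, Dict, Tuple

State = Tuple[int, int, int]  # x, y, heading index (0=N, 1=E, 2=S, 3=W)
MOVES = [(0, 1), (1, 0), (0, -1), (-1, 0)]


def forward(s: State) -> State:
    dx, dy = MOVES[s[2]]
    return (s[0] + dx, s[1] + dy, s[2])


def left(s: State) -> State:
    return (s[0], s[1], (s[2] - 1) % 4)


def right(s: State) -> State:
    return (s[0], s[1], (s[2] + 1) % 4)


# Hypothetical vocabulary: each token names one primitive behaviour.
TOKENS: Dict[str, Callable[[State], State]] = {"F": forward, "L": left, "R": right}
ALPHABET = "FLR"


def run(genome: str, state: State = (0, 0, 0)) -> State:
    """Execute tokens once, in order; run time is bounded by genome length."""
    for token in genome:
        state = TOKENS[token](state)
    return state


def mutate(genome: str, rng: random.Random, rate: float = 0.2) -> str:
    """Per-token deletion, substitution, or insertion, each with chance rate/3."""
    out = []
    for token in genome:
        roll = rng.random()
        if roll < rate / 3:
            continue                                    # deletion
        if roll < 2 * rate / 3:
            out.append(rng.choice(ALPHABET))            # substitution
        elif roll < rate:
            out.extend([token, rng.choice(ALPHABET)])   # insertion
        else:
            out.append(token)
    return "".join(out)


def fitness(genome: str, target: Tuple[int, int]) -> int:
    x, y, _ = run(genome)
    return -(abs(x - target[0]) + abs(y - target[1]))   # 0 means on target


def evolve(seed_genome: str, target: Tuple[int, int],
           generations: int = 200, seed: int = 0) -> str:
    rng = random.Random(seed)
    best = seed_genome
    for _ in range(generations):
        child = mutate(best, rng)
        if child and fitness(child, target) >= fitness(best, target):
            best = child
    return best


if __name__ == "__main__":
    winner = evolve("F", target=(3, 2))
    print(winner, run(winner), fitness(winner, (3, 2)))
```

The design choice worth noticing is that the genome is just a string: mutation needs no knowledge of what the tokens do, and the interpreter needs no knowledge of how the string was produced.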

Apologies if this was too much information. Now you have a better idea of why I don’t write more posts. For me, this is a passion and a hobby. I have no academic credentials but I would love to find others with the same interests. Please subscribe to this blog if you would like to be notified when I post progress reports.

What is now commonly referred to as AI is based on the exploitation (consumption) of knowledge accumulated by humans. Real intelligence - even the weakest, like that of bees - is capable of generating new knowledge, and this is the main difference between intelligence and intelligence imitation.

"I have no academic credentials but I would love to find others with the same interests" - This is exactly what I can say about myself :-)