How Mental Representations Emerge

"The key to artificial intelligence has always been the representation." (Jeff Hawkins)

Computers represent and store facts, images, sound, and processes (programs) in memory. These representations comprise both content (the fact, data, or program itself) and its format (ASCII text, Python code, JPEG, MP4 video, etc.). The format dictates how to interpret the content’s digital ones and zeros. Without a format, the ones and zeros are meaningless. Standards organizations design and regulate computer data formats. The human brain differs from computers in this respect.

There are no data formats in our brains…just neurons. So how does a meaningful representation emerge in a brain? How does the activation of neurons (analogous to the ones and zeros of computer memory) become conscious ideas without a format from a standards organization? The simple (but wrong) answer is that genes encode representational formats. But I dream of unicorns! You cannot tell me that genes alone can account for my internal representation of fantasy animals, or for original ideas that I have not yet imagined. I have a hard time believing that my genes define a Jennifer Aniston neuron[1].

Before I propose a mechanism for the emergence of representation in animals, let's get two things out of the way: an initial definition of representation and a distinction between internal and external representations.

A representation is something that stands in for something other than itself.

The word apple (read or spoken) is a visual and auditory representation of a popular fruit. These written and spoken representations are symbolic: they are products of cultural evolution or invention and bear no resemblance to the apple concept or to the apple itself. This feature makes external representations useful for communication…but only within cultures that share the same vocabulary. But I can also imagine an apple in my sleep or with my eyes closed, with no sensory awareness of a real apple or even a symbolic one, so there must be some internal APPLE concept or representation[2].

In a previous post, Visual Indexing, I showed that we first perceive objects as separate entities without regard to their properties. Then we assign object properties. Only then do we become conscious of the entity and either recognize it or ask, "what's that?" This shows that we can hold indirect internal representations that carry no descriptive content.

Many nonhuman animals communicate with conspecifics even though they lack our faculty for language. Since their calls, dances, gestures, and pheromones trigger predictable behaviors in their conspecifics, I think it is fair to say that their communications are successful external representations. It follows, then, that these social animals have internal representations as well.

External representations exist to communicate internal representations. The former cannot exist without the latter.

Internal Representations

In this post, I focus on internal representations: mental concepts represented by neurons, and how those neurons come to represent a wide range of things without preordained formats. External representations, such as words and language, are (I think) an easier problem. Like computer data formats, we invented them…over eight thousand times[3].

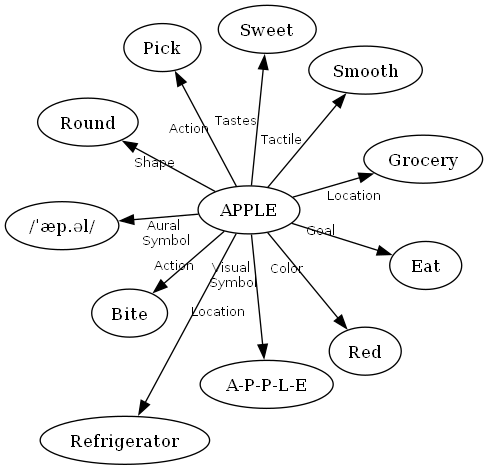

Computer scientists have represented mental concepts as semantic networks. In this model, nodes are analogous to neurons and links are analogous to synaptic connections. The figure shows a simple example of a semantic network.

A semantic network for the concept APPLE. APPLE (all caps) represents the mental concept of apple. A-P-P-L-E represents the visually perceived symbol (word) for the concept APPLE. /ˈæp.əl/ represents the sound of the spoken word apple. Illustration by the author.
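For readers who think in code, the semantic network in the figure can be sketched in Python as a set of labeled, directed links. This is a minimal illustration, not a standard library or a particular AI system: the class `SemanticNetwork` and the relation names (`color`, `written-as`, `spoken-as`, and so on) are hypothetical. Notice that every link carries a label chosen by a human designer, which is the kind of externally designed format this post questions.

```python
from collections import defaultdict


class SemanticNetwork:
    """A minimal semantic network: string nodes joined by labeled, directed links."""

    def __init__(self):
        # node -> set of (relation, target) pairs
        self._links = defaultdict(set)

    def link(self, source, relation, target):
        """Add a labeled link from source to target."""
        self._links[source].add((relation, target))

    def neighbors(self, node):
        """Return the (relation, target) pairs leaving a node, sorted for stable output."""
        return sorted(self._links.get(node, set()))


# Hypothetical relation names; a human designer chose every one of them.
net = SemanticNetwork()
net.link("APPLE", "color", "RED")
net.link("APPLE", "shape", "ROUND")
net.link("APPLE", "taste", "SWEET")
net.link("APPLE", "written-as", "A-P-P-L-E")
net.link("APPLE", "spoken-as", "/ˈæp.əl/")

print(len(net.neighbors("APPLE")))  # 5
```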

A frame-slot representation popularized by symbolic AI of the 1980s could easily represent this semantic network:

Apple

- Color: Red
- Shape: Round
- Taste: Sweet
- Goal: Eat
- Location: Grocery
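The same frame can be sketched as a Python data structure. The `Frame` class here is a hypothetical, minimal stand-in for the frame systems of that era (it omits inheritance, defaults, and procedural attachments). The closing comment points out where the slot names act as an external format.

```python
from dataclasses import dataclass, field


@dataclass
class Frame:
    """A minimal frame: a named concept whose slots hold filler values."""
    name: str
    slots: dict = field(default_factory=dict)

    def get(self, slot, default=None):
        """Look up a slot's filler, or return a default if the slot is absent."""
        return self.slots.get(slot, default)


apple = Frame("Apple", {
    "Color": "Red",
    "Shape": "Round",
    "Taste": "Sweet",
    "Goal": "Eat",
    "Location": "Grocery",
})

print(apple.get("Color"))               # Red
print(apple.get("Texture", "unknown"))  # unknown
# The filler "Round" means nothing by itself. Only the slot name "Shape",
# chosen by a human designer, tells a program how to interpret it.
```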

My criticism of semantic networks and frame-slot structures as models for mental concepts is that they rely on external representations and formats. Properties such as color, shape, or goal are formats that assign meaning to their associated values. What is a goal, anyway? And is an apple really round (no), or does it have some roundness (yes)? My goal here is to rid cognitive models of structures, like formats, that require the intervention or design of external intelligent agents.

What about connectionist approaches? Before training an artificial neural network with supervised learning, knowledge engineers must sort the training set into separate, meaningful categories: one set of dog pictures, another set of cat pictures, and so on. Neural networks may be non-symbolic, but that is a distinction without a difference. All meaning and data formatting still originate from an external intelligent agent. The neural networks of AI do not learn as we do; we program them (see my post AI Does Not Learn).
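To illustrate where the categories come from, here is a toy supervised classifier. It uses a nearest-centroid rule rather than a neural network, purely to keep the sketch short; the feature values and the labels `"dog"` and `"cat"` are invented. The point holds either way: the labels, and the sorting of examples under them, are supplied from outside the program.

```python
import math


def train_centroids(examples):
    """Average the feature vectors for each label. The labels come from a human."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, x in enumerate(features):
            acc[i] += x
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def classify(centroids, features):
    """Return the label whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


# A knowledge engineer has already sorted the pictures into "dog" and "cat".
# The two-number feature vectors are invented stand-ins for image features.
training_set = [
    ([0.9, 0.2], "dog"), ([0.8, 0.3], "dog"),
    ([0.2, 0.9], "cat"), ([0.3, 0.7], "cat"),
]
centroids = train_centroids(training_set)
print(classify(centroids, [0.85, 0.25]))  # dog
```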

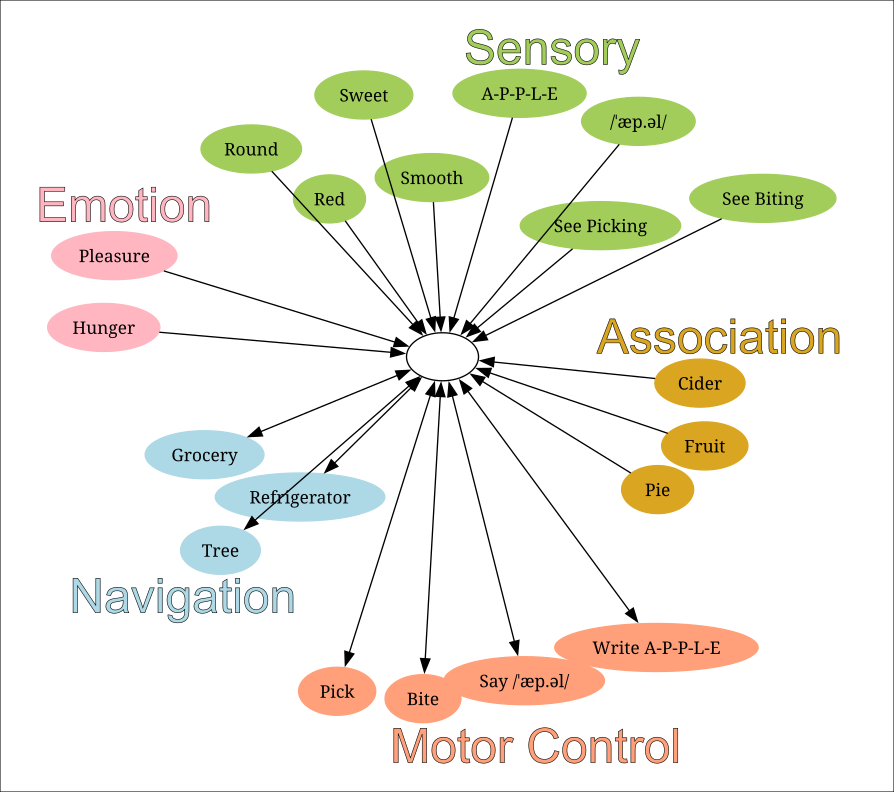

The figure shows a representation of the concept APPLE that is independent of formats or external representations. I’ve labeled most nodes with symbolic terms because that is how authors must communicate with their readers. However, these terms describe sensory inputs, motor control, emotional inputs, and cognitive maps that exist in localized regions of the brain and do not require words, formats, or external representations.

An Embodied Conceptual Network implicitly represents the concept of an apple. Not shown are the axonal links out of this center node that activate other neurons. Illustration by the author.
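One way to sketch an unlabeled center node is as a unit identified only by an opaque number and by which input neurons connect to it. In this hypothetical Python sketch, input neurons are plain integers standing in for cells in sensory, motor, emotional, and navigational regions; the human-readable notes exist only for the reader, and the firing threshold of 0.5 is an arbitrary assumption.

```python
# Hypothetical ids for input neurons in different brain regions. The notes are
# for the human reader only; the concept node never sees them.
SOURCE_NOTES = {
    101: "red (visual)",
    102: "round (visual/tactile)",
    103: "sweet (taste)",
    201: "bite (motor)",
    202: "pick (motor)",
    301: "pleasure (emotion)",
    401: "fruit section (place)",
}


class ConceptNode:
    """An unlabeled node whose meaning lies only in which inputs connect to it."""

    def __init__(self, node_id, inputs, threshold=0.5):
        self.node_id = node_id           # opaque number, not a name
        self.inputs = frozenset(inputs)  # ids of connected input neurons
        self.threshold = threshold       # fraction of inputs needed to fire

    def activation(self, active):
        """Fraction of this node's inputs that are currently active."""
        return len(self.inputs & active) / len(self.inputs)

    def fires(self, active):
        """True when enough of the node's inputs are active at once."""
        return self.activation(active) >= self.threshold


apple_node = ConceptNode(7, SOURCE_NOTES.keys())

# Red and round, seen in the fruit section: 3 of 7 inputs, not enough.
print(apple_node.fires({101, 102, 401}))  # False
# Add biting, sweetness, and pleasure: 6 of 7 inputs.
print(apple_node.fires({101, 102, 401, 201, 103, 301}))  # True
```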

Granted, this is a simple example. The APPLE concept gets a lot more complex than this. THAT-APPLE means a specific instance of an apple. It could be a real red, green, or yellow apple or an abstract painting of an apple. AN-APPLE is a category signifying all apples. And then there is the manufacturer of iPhones and computers[4].

There are several insights we can draw from this simple apple example:

The center node and its connections are unlabeled. The node represents or means APPLE by its connections to neurons activated in various locations in the brain.

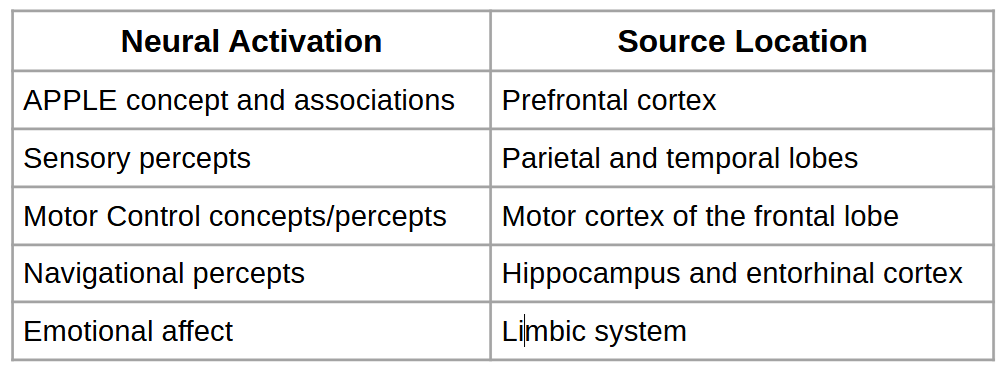

The neural triggers of the APPLE concept originate from multiple regions of the brain. They most likely converge in the prefrontal cortex, where they activate multiple variations of APPLE-related concepts to varying degrees (red apple, golden delicious apple, Asian pear). A winner-take-all circuit then decides which of these candidate concepts best fits the input.

The nodes pick, bite, and /ˈæp.əl/ are both perceptions and motor actions. We learn to pick, bite, and say apple by observing others picking, biting, and speaking.

If you remove the nodes corresponding to the hearing, speaking, writing, or reading of apple symbols, you still have an internal representation of an apple that a dog could understand. And perhaps even a honey bee (see my post What's So Great About Honey Bees?).

The green nodes all originate from the senses, but from different senses. ROUND can be both a visual and a tactile sensation. RED, A-P-P-L-E, SEE-PICKING, and SEE-BITING are all visual. /ˈæp.əl/ is auditory. I cannot confuse one sense with another because each arrives along a separate neural path. Therefore, I do not need to label sensory values with an attribute. The senses are independent of each other, and each sense is itself multi-dimensional: sight includes hue, intensity, saturation, spatial, and temporal (optic flow) qualities. The result is a very high-dimensional description of the APPLE concept.
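The claim that the pathway, not a label, identifies each sense can be sketched as a fixed wiring table: each sense writes into its own slice of one combined vector, so position alone tells which sense a value came from. The pathway names and sizes below are illustrative assumptions, not anatomical numbers; real sensory inputs are vastly larger.

```python
# Hypothetical wiring table: (pathway, number of fibers). Sizes are illustrative.
# The names only help the reader; downstream code relies on positions alone.
PATHWAYS = [("vision", 6), ("touch", 3), ("taste", 2), ("hearing", 4)]


def layout(pathways):
    """Give each pathway a fixed [start, end) slice; return slices and total size."""
    spans, start = {}, 0
    for name, size in pathways:
        spans[name] = (start, start + size)
        start += size
    return spans, start


SPANS, DIM = layout(PATHWAYS)


def combine(signals):
    """Write each pathway's signal into its own slice; silent pathways stay at 0."""
    vector = [0.0] * DIM
    for name, values in signals.items():
        start, end = SPANS[name]
        if len(values) != end - start:
            raise ValueError(f"{name} expects {end - start} values")
        vector[start:end] = values
    return vector


v = combine({"vision": [1.0, 0.0, 0.0, 0.8, 0.2, 0.5], "taste": [0.9, 0.1]})
print(DIM)      # 15
print(v[9:11])  # [0.9, 0.1], the taste slice, identified by position alone
```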

I think most readers will appreciate the value of perception in defining internal concepts. I want to focus on four other sources of conceptual attributes: navigation, emotion, motor control, and associative memory.

Navigation

Honey bees, humans, and a lot of animals in between have cognitive map structures that transform egocentric or body-centered viewpoints into allocentric or map-like viewpoints. Such a cognitive map is necessary for navigation. Transforming location information into an allocentric representation also makes communication possible because organisms can share a common viewpoint.
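The egocentric-to-allocentric transform is, at its core, a rotation by the animal's heading followed by a translation by its position. The minimal Python sketch below assumes a flat 2D map, headings measured counterclockwise from the map's x axis, and egocentric offsets given as (forward, left). It shows two animals with different viewpoints arriving at the same map coordinates for one landmark, which is what makes a shared description possible.

```python
import math


def ego_to_allo(position, heading, ego):
    """Convert an egocentric offset (forward, left) into allocentric map coordinates.

    position: (x, y) of the animal on the map
    heading:  facing direction, radians counterclockwise from the map's +x axis
    ego:      (forward, left) offset of the landmark relative to the body
    """
    px, py = position
    forward, left = ego
    c, s = math.cos(heading), math.sin(heading)
    # Rotate the body-centered offset into map orientation, then translate.
    return (px + forward * c - left * s, py + forward * s + left * c)


# Two animals at different places, facing different ways, see one landmark.
a = ego_to_allo((0, 0), 0.0, (5, 0))           # 5 units straight ahead, facing +x
b = ego_to_allo((5, -3), math.pi / 2, (3, 0))  # 3 units straight ahead, facing +y
print([round(x, 6) for x in a])  # [5.0, 0.0]
print([round(x, 6) for x in b])  # [5.0, 0.0]
```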

Honey bees and humans both have heading cells, which fuse multiple directional sensors in an attractor network[5] to estimate a single heading or direction of travel. Bees and humans also share a cognitive structure called a location vector, which denotes the location of a landmark or destination relative to some starting point. Humans and other mammals have additional navigational structures called place cells, grid cells, and border cells in the entorhinal cortex and hippocampus[6].
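The sketch below makes two of these ideas concrete under simplifying assumptions. `fuse_headings` combines several directional cues with a weighted circular mean, a simple stand-in for the estimate an attractor network settles on, not a model of the network's dynamics. `location_vector` assumes the vector is built by path integration (summing the steps of a journey), one well-studied way insects obtain it. The cue angles and weights are invented.

```python
import math


def fuse_headings(cues):
    """Fuse (angle_radians, weight) cues into one heading with a weighted circular mean.

    A simplified stand-in for what an attractor network settles on; it does not
    model the network dynamics themselves.
    """
    x = sum(w * math.cos(a) for a, w in cues)
    y = sum(w * math.sin(a) for a, w in cues)
    return math.atan2(y, x)


def location_vector(steps):
    """Path integration: sum (heading_radians, distance) steps into one vector.

    Returns (distance, direction_radians) from the starting point.
    """
    x = sum(d * math.cos(h) for h, d in steps)
    y = sum(d * math.sin(h) for h, d in steps)
    return math.hypot(x, y), math.atan2(y, x)


# Three hypothetical directional cues that disagree slightly (88, 92, 95 degrees).
cues = [(math.radians(88), 1.0), (math.radians(92), 1.0), (math.radians(95), 0.5)]
print(round(math.degrees(fuse_headings(cues)), 1))  # 91.0

# Travel 3 units east, then 4 units north: the location vector is 5 units long.
dist, direction = location_vector([(0.0, 3.0), (math.pi / 2, 4.0)])
print(round(dist, 6), round(math.degrees(direction), 2))  # 5.0 53.13
```

A circular mean is used instead of an arithmetic mean so that cues at 359° and 1° average to 0°, not 180°.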

Honey bees and humans both improve their navigation by recognizing landmarks. These are not simply objects to be recognized (like an apple) but objects that represent a spatial and/or sequential position or location. In my post What's So Great About Honey Bees?, I report on research in which honey bees actually count landmarks in order to reach a nectar source.

Animals that navigate and communicate socially possess these special navigational representations. This matters for our discussion because place or location is an intrinsic part of how we define many objects. We expect to find apples in apple trees, at the grocery, and in the refrigerator. The conceptual network for apple shows arrows going both ways because standing in the fruit section of the grocery store can trigger the concept of apple.

For more on navigation, see my post A Honey Bee's First Orientation Flight.

Emotion

Emotions entail feelings that have a positive or negative valence (goodness or badness). These feelings manage homeostasis, motivate behaviors, and influence what we commit to long-term memory. Here I discuss two emotions that help define an apple: one feels good and the other less so.

Sensory emotions are genetically determined feelings of pleasure or discomfort associated with sensory perceptions. The experience of eating an apple is both a sensory experience and a feeling of pleasure that comes from its sweetness.

Homeostasis is self-regulation of internal bodily processes. When the core body temperature becomes too high or too low, we sweat or shiver. If we exert ourselves, our heart rate and breathing rate increase. These are automatic, internal processes, but not all homeostasis is automatic and internal. Sometimes a homeostatic emotion prods us with feelings to behave in ways that restore our internal balance…like when we get hungry. Hunger is a feeling with a negative valence. To make this discomfort go away, we eat. Apples are a food we get pleasure from, but they also relieve our hunger.
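The hunger loop described here behaves like a negative-feedback controller: a deficit builds, crosses a threshold, and the resulting discomfort triggers behavior that pushes the deficit back down. The constants in this Python sketch are illustrative assumptions, not physiological values.

```python
# Illustrative constants, not physiological measurements.
HUNGER_THRESHOLD = 5.0  # energy deficit at which hunger prods us to act
BURN_PER_HOUR = 1.0     # deficit added each hour by metabolism
APPLE_ENERGY = 3.0      # deficit removed by eating one apple


def simulate(hours):
    """Run a negative-feedback loop; return apples eaten and hourly deficits."""
    deficit, apples_eaten, log = 0.0, 0, []
    for _ in range(hours):
        deficit += BURN_PER_HOUR          # metabolism pushes us away from balance
        if deficit >= HUNGER_THRESHOLD:   # hunger (negative valence) is felt...
            deficit -= APPLE_ENERGY       # ...and eating restores balance
            apples_eaten += 1
        log.append(deficit)
    return apples_eaten, log


eaten, log = simulate(12)
print(eaten, max(log))  # 3 4.0
```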

Emotion determines what experiences—good and bad—are worthy of long-term memory. Imagine biting into an apple and seeing half a worm in the apple. You might reasonably feel disgusted (yet another example of emotional affect) and spit out the other half of the worm. But this feeling might also sear the memory into your brain so that you would remember to check your apples for worm holes in the future.
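A crude way to express emotional gating of memory is a filter that keeps only experiences whose emotional intensity (the absolute value of their valence) crosses a threshold. This hypothetical sketch is all-or-nothing for simplicity; the valence numbers and the 0.6 threshold are invented.

```python
from dataclasses import dataclass


@dataclass
class Experience:
    description: str
    valence: float  # -1.0 (very bad) to +1.0 (very good)


def consolidate(experiences, threshold=0.6):
    """Keep only experiences whose emotional intensity |valence| crosses a threshold.

    A toy gate: real consolidation is graded, not all-or-nothing.
    """
    return [e for e in experiences if abs(e.valence) >= threshold]


day = [
    Experience("walked past the fruit stand", 0.05),
    Experience("ate a sweet apple", 0.4),
    Experience("bit into an apple with half a worm", -0.9),
]
remembered = consolidate(day)
print([e.description for e in remembered])
# ['bit into an apple with half a worm']
```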

For more on emotions, see my post Why Emotion Matters.

Role of Motor Control in Internal Representations

A deep groove in the human brain, called the central sulcus, separates the frontal lobe in front from the parietal lobe behind it. The parietal, temporal, and occipital lobes process sensory data. The frontal lobe handles motor control and, in the prefrontal cortex, association. Motor control does more than coordinate and move the muscles of our body. It aids our cognition and learning. And it represents abstract actions, not simply our own personal actions.

When I bite into an apple, groups of motor neurons coordinate my grasp of the apple, widen my jaw, retract my tongue, push the apple against my teeth, and bite down. Remarkably, some of these same neurons also activate when I simply observe someone else biting into an apple[7].

These are called mirror neurons because both performing an action and observing it activate them. As such, they do not represent one's own action so much as a particular action in the abstract. Some researchers believe that mirror neurons play a role in learning new behaviors by emulating or imitating others. At any rate, they represent an abstract behavior.

Association

When I hear the word "pie", I naturally think of apple pie (unless I am standing in a pizzeria). "Cider" and "fruit" are other words you might associate with the concept APPLE. But not every experience results in a conscious association.

Most of what we experience never enters our consciousness, but it persists and influences our cognition. This unconscious influence, called priming, changes a person's ability to identify or classify a perception because of a previous encounter with that item or a related one. If I show you an image of a lizard and then ask you to complete a word beginning with "rep_", you are likely to respond with "reptile" even though more than 600 other English words begin with "rep".
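Priming can be sketched as residual activation that tips a later choice. In this hypothetical Python sketch, each candidate word has a baseline activation, seeing a lizard adds a boost to related words, and stem completion picks the most active match. The vocabulary, association table, and numbers are invented for illustration.

```python
# Invented baseline activations for a tiny vocabulary.
BASELINE = {"repeat": 0.50, "report": 0.45, "reply": 0.40, "reptile": 0.10}

# Assumed associations: seeing a lizard spreads activation to related words.
ASSOCIATIONS = {"lizard": {"reptile": 0.6}}


def complete_stem(stem, primes=()):
    """Return the most active vocabulary word that starts with `stem`."""
    activation = dict(BASELINE)
    for prime in primes:
        for word, boost in ASSOCIATIONS.get(prime, {}).items():
            activation[word] = activation.get(word, 0.0) + boost
    candidates = {w: a for w, a in activation.items() if w.startswith(stem)}
    return max(candidates, key=candidates.get)


print(complete_stem("rep"))                     # repeat
print(complete_stem("rep", primes=["lizard"]))  # reptile
```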

Modularity of Representations

I am skeptical of many attempts to localize functionality in the human brain. However, I do not doubt that modularity in the brain exists. The locations in the brain where these mental representations originate are uncontroversial.

One take-away from this chart is that a mental concept like APPLE does not exist in one place but requires neural activations from nearly every part of the human brain.

An organism's genetic code defines the location of each module. Our internal representations come from our genetics and our experience in the world. This stands in stark contrast to today's AI, whose knowledge content and formatting are programmed by software developers.

What About Abstract Concepts Like Freedom or Justice?

You can see, touch, smell, and taste an apple. When you bite into it, you will hear its crunch. It is a simple, sensory concept. It is a concept that might exist within a dog’s brain. What about concepts like democracy, money, unicorns, or doomscrolling? None of these exist as concretely as an apple. Each one is a product of human imagination. I call these transcendental concepts because they transcend the senses. Yuval Noah Harari captured the essence of transcendental concepts when he said, "There are no gods in the universe, no nations, no money, no human rights, no laws, and no justice outside the common imagination of human beings."

In my post The Story of Intelligence, I describe how simple animals with egocentric viewpoints evolved into navigating and communicating animals with allocentric viewpoints. Once these animals began to innovate, they gained transcendental concepts and a viewpoint unbounded by their senses and by reality itself. In that post, I describe the process of innovation in some detail and show how the transition from egocentric to allocentric to transcendental is recapitulated in evolution, development, use of signs, umwelt, and the cognitive process.

Thousand Brains Theory

I have tried to show how a mental representation can exist without formats or intervention from an external intelligent agent, using the mental concept of an apple as an illustration. However, there is no single APPLE neuron any more than there is a single Jennifer Aniston neuron. There are probably thousands of each. Each APPLE neuron is slightly different, but all compete in a grand winner-take-all competition. And the input to each neuron is a sparse, high-dimensional vector. This is consistent with Jeff Hawkins's Thousand Brains theory of the neocortex. I recommend his 2021 book, A Thousand Brains: A New Theory of Intelligence.
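Here is a toy Python sketch of several slightly different APPLE neurons competing in a winner-take-all step over sparse, high-dimensional inputs. It borrows the term sparse distributed representation (SDR) from Numenta's work, but it is not an implementation of the Thousand Brains theory; the sizes, the subset-based tuning, and the neuron names are assumptions.

```python
import random

DIM, ACTIVE = 2048, 40  # hypothetical sizes: about 2% of inputs active at once


def random_sdr(rng):
    """A sparse distributed representation, stored as the set of active input indices."""
    return frozenset(rng.sample(range(DIM), ACTIVE))


def overlap(a, b):
    """Number of active inputs two patterns share."""
    return len(a & b)


def winner_take_all(neurons, pattern):
    """Name of the neuron whose inputs best overlap the pattern (first one wins ties)."""
    return max(neurons, key=lambda name: overlap(neurons[name], pattern))


rng = random.Random(42)
apple = random_sdr(rng)

# Four slightly different APPLE neurons, each tuned to a different-sized subset
# of the APPLE pattern, plus one neuron tuned to an unrelated random pattern.
neurons = {f"apple-{i}": frozenset(rng.sample(sorted(apple), 30 - 5 * i)) for i in range(4)}
neurons["unrelated"] = random_sdr(rng)

# A noisy glimpse of an apple: 5 of the pattern's 40 active inputs are missing.
glimpse = frozenset(rng.sample(sorted(apple), ACTIVE - 5))

winner = winner_take_all(neurons, glimpse)
print(winner)  # apple-0
```

Because `apple-0` is tuned to the largest share of the pattern, it keeps at least 25 matching inputs even when 5 are missing, so it wins despite the incomplete glimpse. Tolerance to missing inputs of this kind is one reason sparse codes are attractive.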

The Thousand Brains theory focuses on the neocortex. I find the theory attractive because it describes an architecture that is complex like the brain but simple in specification. A simple specification is something that can evolve…like DNA.

Summary

In this post, I have tried to show where the elements of basic mental concepts originate, whether they feed a real brain or a computer simulation of one. If we are ever to have computers that are smarter than we are, we will need internal representations that are independent of external intelligent agents.

Other Substack posts on representation and meaning have appeared recently. They are more philosophical treatments than what I have presented here. I recommend a series of four essays on representation by Suzi Travis:

Essay 1 looks at Searle’s Chinese Room.

Essay 2 explores symbolic communication.

Essay 3 tackles the grounding problem: how words get their meaning.

Essay 4 addresses representationalism in neuroscience.

I use the terms internal representation and mental concept interchangeably.

The World Atlas of Languages has compiled 8324 languages, spoken or signed, as reported by governments, public institutions and academic communities. This would not include ancient, extinct languages. Roughly 7000 languages are in use today.

According to Walter Isaacson's biography of Steve Jobs, Jobs chose the name Apple partly because he was on a fruitarian diet and had just returned from an apple farm, and partly because the name sounded friendly and would appear ahead of Atari in the phone book.

I describe attractor networks in What's So Great About Honey Bees? In that post, I also argue that evolution exapted (a term for evolutionary re-purposing) part of our navigational faculty for reasoning and problem solving.

Moser MB, Rowland DC, Moser EI. Place cells, grid cells, and memory. Cold Spring Harb Perspect Biol. 2015 Feb 2;7(2):a021808. doi: 10.1101/cshperspect.a021808. PMID: 25646382; PMCID: PMC4315928.

Rizzolatti G, Fadiga L, Gallese V, Fogassi L. Premotor cortex and the recognition of motor actions. Brain Res Cogn Brain Res. 1996 Mar;3(2):131-41. doi: 10.1016/0926-6410(95)00038-0. PMID: 8713554.

https://agieng.substack.com/p/semantic-storage-ii

I agree the Grandmother neuron or the Jennifer Aniston neuron concept is just implausible, although it is frequently promoted in social media posts. I suspect this is because people want simple answers to complex issues. This is similar to trying to understand quantum physics without understanding the math: it just can’t be done.