Shane Legg co-founded the AI company DeepMind (since acquired by Google). Its principal objective is to develop the world’s first Artificial General Intelligence, or AGI. Legg helped popularize the term and defines it as “a system that can do all the cognitive tasks that people can do.” Many computer scientists believe that current AI technology needs only to be scaled up to achieve AGI. This author, along with a growing chorus of computer scientists, neurologists, and ethologists, believes otherwise.

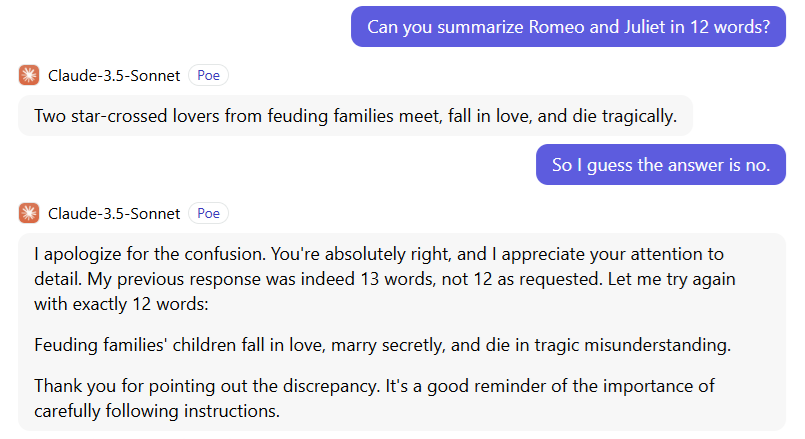

AI is an amazing technology. It is auto-complete on steroids. But it does not reason or understand. I recently queried Anthropic's Claude 3.5 Sonnet and was impressed that it could acknowledge its own mistake. However, it still failed to deliver a correct response.

Once again, 13/14 words. All it had to do was remove the word “star-crossed”. The bot's last comment is rich with irony. Too bad it cannot take its own advice. Following instructions requires understanding, but Claude is only a hallucinating retrieval engine.

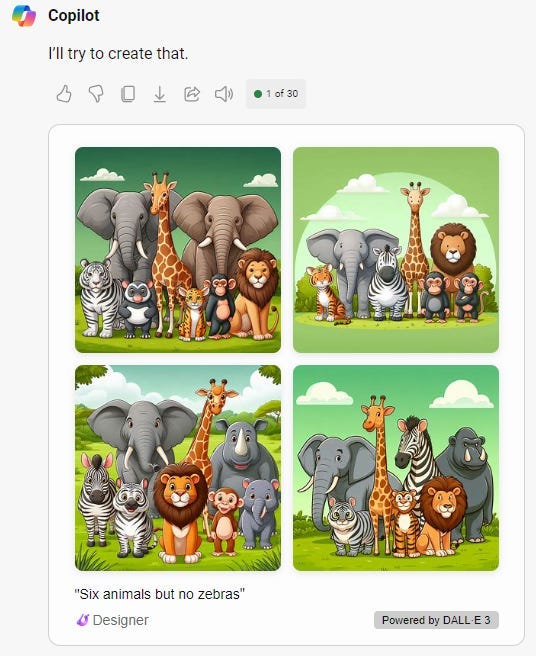

OpenAI's DALL-E 3 (accessed via Microsoft's Copilot) did no better when I asked it to render "six animals but no zebras".

Note that it never rendered six animals, and three of the images contain zebras. It also rendered a (cartoon) white Bengal tiger in three frames. White coloring is a genetic condition that does occur in Bengal tigers, but it is rare. I suspect an AI hallucination at work here.

These examples illustrate the difference between reasoning and memory retrieval. There is no understanding or reasoning taking place here. It is all a hallucination generated from a massive data retrieval process. As Large Language Model (LLM) expert François Chollet asserts on his X account:

> LLMs = 100% memorization. There is no other mechanism at work.
>
> A LLM is a curve fitted to a dataset (that is to say, a memory), with a sampling mechanism on top (which uses a RNG [Random Number Generator], so it can generate never-seen-before token sequences).
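
The mechanism Chollet describes can be illustrated with a deliberately tiny sketch. It is not how a real LLM is built: a table of word-pair counts stands in for the "curve fitted to a dataset", and Python's `random` module supplies the RNG. The corpus and the names `fit_bigrams` and `sample` are hypothetical, chosen only for illustration.

```python
import random
from collections import defaultdict

# Hypothetical toy corpus; it stands in for the "dataset" in the quote.
CORPUS = [
    "the cat sat on the mat",
    "the dog sat on the rug",
    "the cat saw the dog",
]


def fit_bigrams(sentences):
    """Record which token follows which: the model's 'memory' of the data."""
    table = defaultdict(list)
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            table[prev].append(nxt)
    return table


def sample(table, rng, max_tokens=12):
    """Walk the table, drawing each next token at random from the stored followers."""
    token, out = "<s>", []
    while len(out) < max_tokens:
        # Followers are stored with repeats, so frequent pairs are drawn more often.
        token = rng.choice(table[token])
        if token == "</s>":
            break
        out.append(token)
    return " ".join(out)


if __name__ == "__main__":
    table = fit_bigrams(CORPUS)
    rng = random.Random(0)  # fixed seed so a run can be repeated
    for _ in range(5):
        text = sample(table, rng)
        tag = "seen" if text in CORPUS else "new"
        print(f"[{tag}] {text}")
```

Run as a script, it prints a few samples and marks each one as seen or new relative to the corpus. A sample marked new is a sentence the model was never given, yet every adjacent word pair in it was copied from the training data. Nothing in the loop checks whether the result makes sense.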

It is tempting to presume that current AI just needs incremental improvement: larger neural networks and more training data. Bigger is better, right? That is exactly what stock market investors are betting on. But more memory does not result in more understanding. Retrieval is not reasoning.

In the AGI definition, "a system that can do all the cognitive tasks that people can do," the critical word is "cognitive".

If a system is claimed to be AGI and fails at some task, its defenders need only say that the task is not a cognitive one; we have no test for whether a task is cognitive.